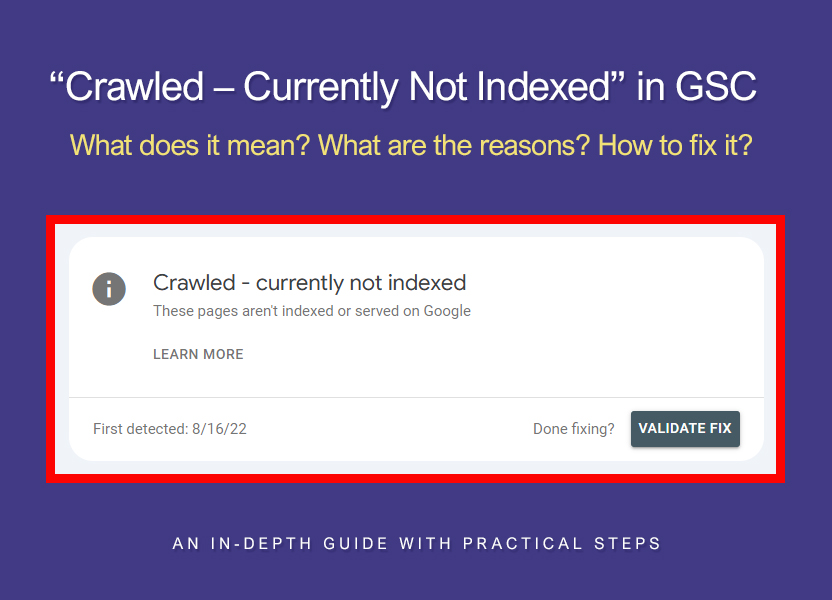

This guide provides a detailed breakdown of the “Crawled, currently not indexed” status in Google Search Console. It can tell you a lot about your site — if you know what it shows, when it appears, and what it actually means.

From this guide, you will learn:

- What the status “Crawled, currently not indexed” means

- How “Crawled, currently not indexed” differs from “Discovered, currently not indexed”

- When “Crawled, currently not indexed” is perfectly normal

- The reasons for “Crawled, currently not indexed” on content pages

- When “Crawled, currently not indexed” means trouble, and what’s causing it

- How to fix “Crawled, currently not indexed”

After spending countless hours analyzing websites affected by Google penalties, I noticed a recurring pattern — the “Crawled, currently not indexed” status often plays a central role in diagnosing these problems.

“Crawled, currently not indexed” is one of those indexing statuses that might look harmless, especially when it applies to technical or utility pages that weren’t supposed to be indexed anyway. But when content pages such as blog posts get flagged with this status, it can be an early symptom of serious issues that may later destroy your organic traffic.

And when hundreds or even thousands of content pages receive the “Crawled, currently not indexed” status, it may indicate that Google has a low overall assessment of your website. Google crawls your content but refuses to include it in the index, judging it not valuable enough to be indexed. Why this happens — and what you can do about it — is what we’ll explore below.

“Crawled, currently not indexed” means that Googlebot successfully crawled the page — it made an HTTP request, retrieved the HTML, rendered the JavaScript (if present), and passed the content to Google’s internal evaluation systems. But after analyzing the data, Google decided not to include the page in the index.

At first, this might seem like a system bug — but it’s not. It’s not the server’s fault and not a technical glitch. It’s a judgment call: the result of Google assessing the content and deciding to hold it out of the index.

Google has limited indexing resources. Crawling a page doesn’t mean it will be indexed. In fact, especially since the explosion of AI-generated content, Google doesn’t even aim to index everything. If a page is considered low-value, irrelevant, or unhelpful, indexing is postponed — or skipped entirely.

I’ve often heard the opinion that resource saving — specifically crawl budget limitations — forces Google to assign the “Crawled, currently not indexed” status, refusing to index the page. In reality, that’s not the case.

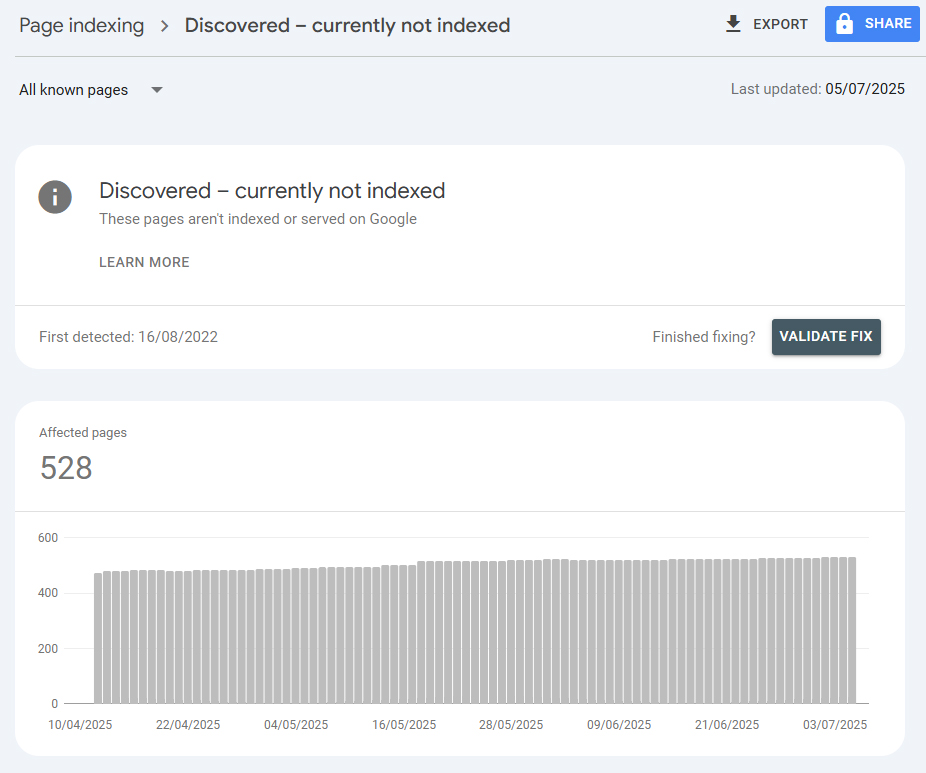

If a website is genuinely experiencing crawl budget issues across the entire domain, the affected pages will be labeled differently — with the status “Discovered, currently not indexed”. This means Google has learned about the existence of the page but hasn’t yet attempted to crawl it. In contrast, “Crawled, currently not indexed” means the page has already been crawled.

So why would Googlebot decide not to crawl a discovered page, even though it knows about it? First, it’s important to understand that this is not a final decision but a temporary one. Over time, Google may return to the page and crawl it. This often happens when a large number of pages are uploaded to the site at once, and Google delays crawling by placing those pages in a queue. During that time, they will show the status “Discovered, currently not indexed”.

The second reason could be technical issues that occurred during the attempt to crawl the page, for example server errors, or a server that responds so slowly that crawling multiple pages could further degrade its performance. In such cases, Google may postpone the crawl.

The third reason is the very crawl budget limitation mentioned earlier. But this is quite rare and typically applies only to very large websites with over half a million pages. As Google’s Gary Illyes officially stated: “90% of websites don’t need to think about crawl budget”.

The fourth reason is the poor quality of the website as a whole: Google decides not to waste resources on crawling and marks the remaining pages as “Discovered, currently not indexed”. This is a rather curious case because Google isn’t evaluating the current page (it hasn’t crawled it yet) but is predicting its likely quality from other pages on the website. That is, it’s enough for Google to crawl part of the site to conclude that further crawling is unnecessary, because there’s a high probability that the rest of the pages are of similarly low quality.

NOTE: What’s interesting is that even if you manually request the crawling of such a page, it may be crawled but still not indexed and end up with the status “Crawled, currently not indexed”. If that’s the case, it confirms that the reason lies in the low content quality and the perceived value of the page.

In fact, when Google automatically refuses to index certain pages and labels them with the status “Crawled, currently not indexed”, it can actually be beneficial for your website.

Google is not supposed to index absolutely every page of your site. This behavior protects your site from having unimportant pages enter the index: pages that were never meant to be indexed in the first place.

Specifically:

- Pagination pages (?page=2, ?page=3, etc.) — these contain no unique content and simply duplicate core sections. Their indexing only dilutes the site’s structure.

- Internal search result pages (/search?q=…) — Google doesn’t index these by default, since their content is unstable and depends entirely on the query.

- Feed pages (/feed/, /post/feed/) — they duplicate content intended for subscribers, not for search results.

- Product filter pages (/shop?color=blue&size=M) — especially when there are many filters and the page content changes minimally. These can generate thousands of URLs with nearly identical content.

- CMS utility pages (/cart/, /checkout/, /admin/, /wp-json/) — these are functional parts of the website and not meant to appear in search.

- Calendar and archive pages (/2025/07/, /category/news/page/4) — they don’t contain unique content; they merely group together posts that are already indexed.

- Sorting parameter URLs (?sort=asc, ?orderby=date) — if proper canonical tags are not set, these create duplicate content and interfere with prioritizing the right pages.

- Empty or unfinished pages — these are templates automatically generated by your CMS, but no content has yet been uploaded to them.

So if you find that your “Crawled, currently not indexed” pages mostly fall into these categories, it’s absolutely normal. In fact, that’s how it should be.
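To see how much of a “Crawled, currently not indexed” export falls into these categories, the URLs can be sorted automatically. The sketch below is only an illustration: the rule names, parameter names, and path patterns are assumptions taken from the examples in the list above, and they will need adjusting for your own CMS and URL scheme.

```python
import re
from typing import List
from urllib.parse import urlsplit, parse_qs

# Hypothetical path patterns modeled on the examples in the list above.
PATH_RULES = [
    ("internal search", re.compile(r"^/search/?$")),
    ("feed", re.compile(r"/feed/?$")),
    ("utility", re.compile(r"^/(cart|checkout|admin|wp-json)(/|$)")),
    ("pagination", re.compile(r"/page/\d+/?$")),
    ("archive", re.compile(r"^/\d{4}/\d{2}/?$")),
]

# Hypothetical query parameters; real sites use many other names.
QUERY_RULES = [
    ("pagination", {"page"}),
    ("internal search", {"q", "s"}),
    ("sorting", {"sort", "orderby"}),
    ("filter", {"color", "size"}),
]


def classify_url(url: str) -> str:
    """Return a technical category for the URL, or "content" if no rule matches."""
    parts = urlsplit(url)
    for label, pattern in PATH_RULES:
        if pattern.search(parts.path):
            return label
    params = set(parse_qs(parts.query, keep_blank_values=True))
    for label, names in QUERY_RULES:
        if params & names:
            return label
    return "content"


def technical_share(urls: List[str]) -> float:
    """Fraction of URLs that match any technical rule."""
    if not urls:
        return 0.0
    technical = sum(1 for u in urls if classify_url(u) != "content")
    return technical / len(urls)
```

If most of the list is technical, the status is probably harmless. The URLs that come back as "content" are the ones worth a closer look.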

What I do recommend, however, is reviewing the pages from the last item in the list (empty or unfinished pages). You might consider deleting some of them or setting up a 301 redirect to a more relevant page.

Let’s now look at the reasons why content pages — which were supposed to be indexed — end up with the status “Crawled, currently not indexed”.

This is often perceived by webmasters as a problem, but in reality, the situation can vary greatly and depends on many different factors.

For example, when a new page is published, it’s perfectly natural for Google to first crawl and analyze its content and then decide whether or not to include it in the index. This is entirely normal. Even if the page is still not indexed a couple of weeks later, it’s not necessarily a cause for concern — it may still get indexed later. This is especially common on newly created websites with low or zero traffic.

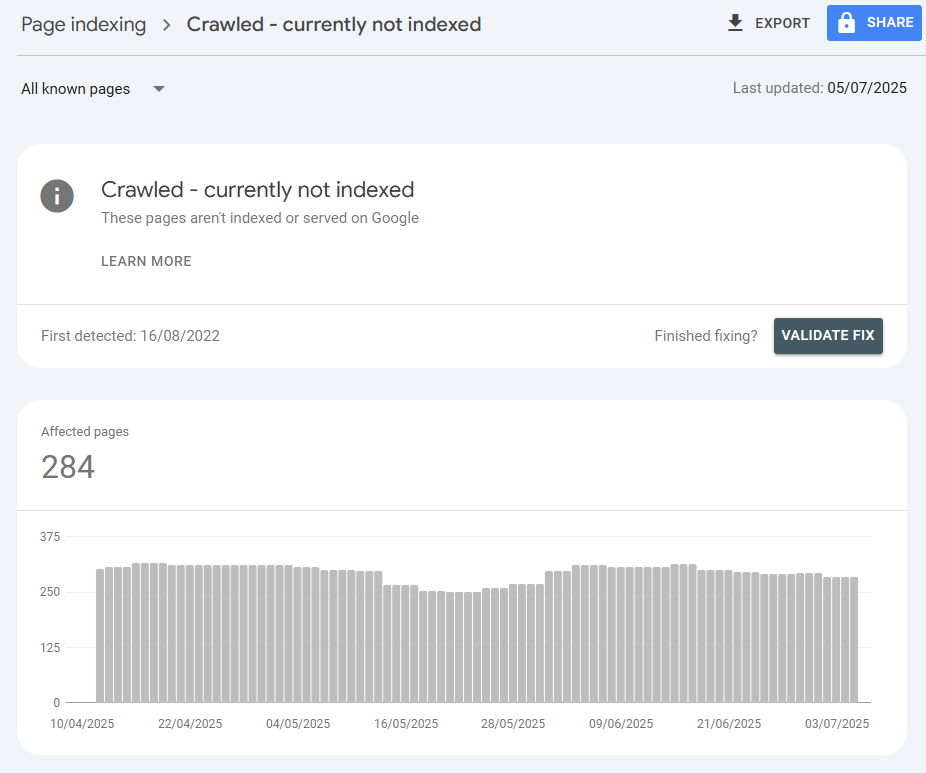

However, when “Crawled, currently not indexed” starts appearing on hundreds or even thousands of long-existing pages, it’s a warning sign. In other words, these pages were once successfully indexed but later dropped out of the index and received the “Crawled, currently not indexed” status.

I observed a case where one of our client’s websites began rapidly losing visibility, and this exact status was at the core of the issue. Upon analysis, I found that pages with thin content and poor structure started receiving this status more frequently. These days, Google takes a tough stance in such cases — it can quickly deindex any pages that lack original content, useful value, clear structure, and internal linking.

Thin content (especially if it’s shorter than competing pages) is interpreted by Google as low-value and is easily thrown into the non-indexable category. The same applies to duplicate pages — if a site has multiple versions of the same content (for example, due to incorrect canonical tags), Google may choose to index only one version, or sometimes none at all.

Sometimes, the cause may lie in site bugs, unnecessary redirects, or paywalled/closed pages that are technically accessible to Googlebot but aren’t intended for users. Google crawls these and immediately excludes them from the index to avoid showing non-target content in search results.

There are also less common secondary causes. If the site responds slowly or frequently returns 5xx errors, Google concludes that crawling is too costly and starts to “minimize damage” — it reduces crawl coverage and prioritizes more valuable or popular pages. Similarly, if your URL structure is overly complex and forces Googlebot to “wander” inefficiently across a maze of pages, this too can contribute to deindexing and assigning the “Crawled, currently not indexed” status. Quite often, the reason is not just one single issue — but a combination of several factors acting together.

When thin, duplicate, or technically broken pages like those described in the previous section show up as “Crawled, currently not indexed”, the situation is understandable and logical, though of course not a pleasant one.

But there are cases when content pages with sufficient text, proper internal linking, clean URL structure, and no visible technical issues (pages that visually appear to deserve attention) suddenly drop out of the index and receive the “Crawled, currently not indexed” status.

I first noticed this issue about two years ago. At first, I thought it was a temporary glitch in Google’s system. But over time, more data appeared, and the situation became clearer.

Let me illustrate this with two client cases: one content-based review site and one ecommerce site.

In the first case, the review website was publishing a large number of content pages generated using AI, without much effort put into improving or editing them afterward. As a result, the pages were unique in wording but not truly original, since they merely rephrased what had already been published on other websites within the same niche. The actual usefulness of such reviews for readers was highly questionable. In addition, some of the articles weren’t even particularly relevant to the overall theme of the website.

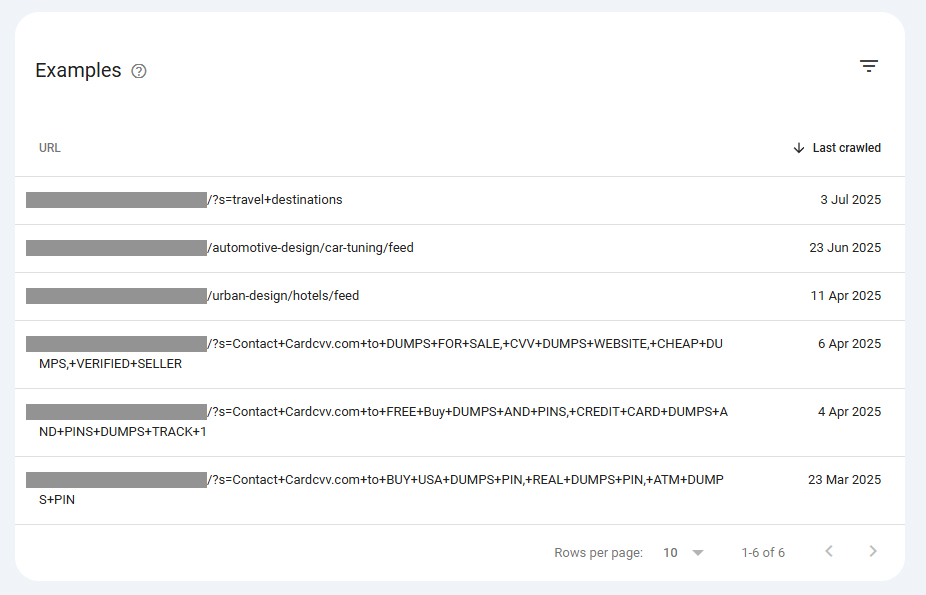

As a result, Google deindexed many of these pages, marking them as “Crawled, currently not indexed”. Out of nearly 1000 original pages on the site, fewer than 300 remained indexed.

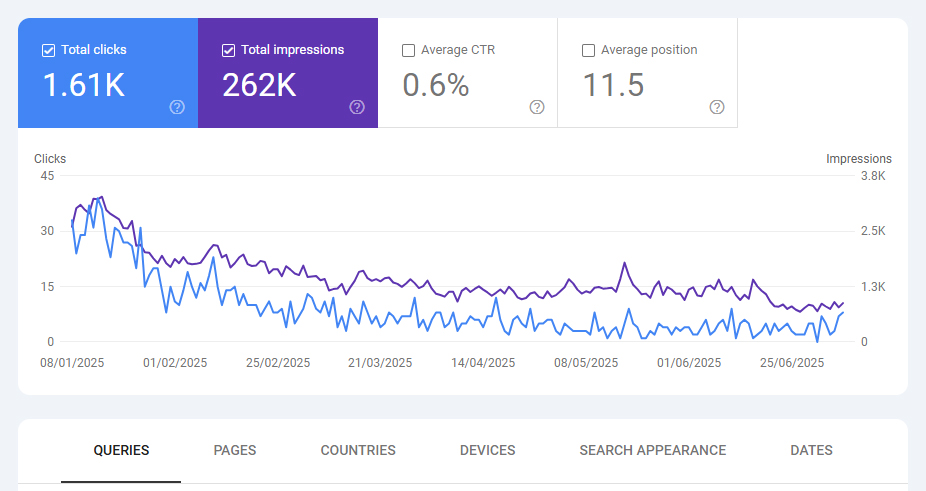

But the key issue is that even those 300 pages still in the index saw a significant drop in traffic right after one of Google’s core updates. Thus, the client’s website received an algorithmic Google penalty for scaling content that provided no real value and usefulness to readers.

The situation with the ecommerce website was different in nature but led to the same outcome. Many product pages contained non-unique content. In most cases, the descriptions were copied from official brand websites or from Amazon. In addition, the content volume was often insufficient — limited to just a few short paragraphs.

As a result, these pages were also removed from the index and marked as “Crawled, currently not indexed”. Over time, the site’s overall organic traffic declined — just like in the first case.

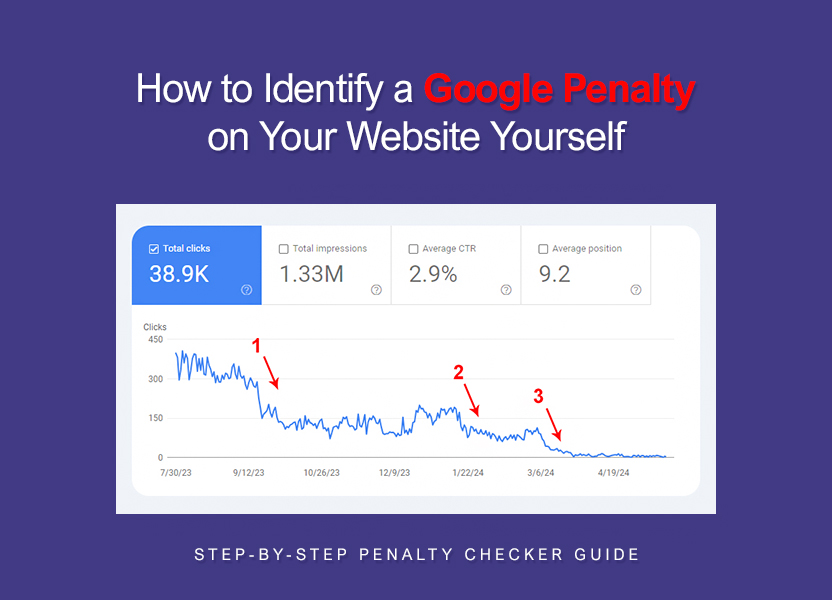

At RecoveryForge, since we deal with many websites affected by Google penalties, I had enough examples to analyze the correlation between mass deindexing of existing pages (marked “Crawled, currently not indexed”) and a general decline in search traffic — even on the pages that remained indexed.

Here are some patterns I observed:

Websites that were later penalized by Google for content issues often had prior problems with content pages being deindexed. And those pages were typically marked as “Crawled, currently not indexed”.

In the vast majority of cases, the root cause was the publication of bare AI-generated content that wasn’t original enough and lacked real value for readers. A user could read essentially the same thing in any other article on the topic published elsewhere.

The second most common cause was insufficient content volume. Google has previously stated that word count alone is not a ranking factor. But in this context, low word count correlates with thin content — pages that don’t provide enough information to satisfy user queries. This is especially problematic when the content is not original, or worse — copied, as was the case with our client’s ecommerce store.

Another important point: the process of pages dropping out of the index with the “Crawled, currently not indexed” label was not immediate. It happened gradually, over the course of several months. And if site owners (or their SEOs) had paid attention to the growing number of such pages, they could have taken action to prevent the Google penalties — before they actually hit.
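Because the decline is gradual, it can be tracked with a simple monthly count of pages in this status. The sketch below assumes you record that number yourself once a month (for example, from the Page indexing report); the function names and the three-month threshold are illustrative choices, not a rule from Google.

```python
from typing import List


def growth_streak(monthly_counts: List[int]) -> int:
    """Count consecutive month-over-month increases at the end of the series."""
    streak = 0
    for i in range(len(monthly_counts) - 1, 0, -1):
        if monthly_counts[i] > monthly_counts[i - 1]:
            streak += 1
        else:
            break
    return streak


def needs_attention(monthly_counts: List[int], min_streak: int = 3) -> bool:
    """Flag a site whose count has grown for at least `min_streak` months in a row."""
    return growth_streak(monthly_counts) >= min_streak
```

For example, the series `[40, 38, 55, 90, 160]` has grown for three months in a row and would be flagged, while `[40, 42, 41]` would not.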

What should you do if older pages start dropping out of the index with the “Crawled, currently not indexed” label? How can you fix it?

First of all, don’t panic or rush to manually submit them for indexing via GSC — that won’t solve the issue. The first step is to understand why your pages are receiving the “Crawled, currently not indexed” status in your specific case.

Here’s what I recommend doing, step by step:

Step 1 - Basic checks

These are the simplest checks and should be done first — they take very little time:

- Make sure there is no noindex directive on the page (either in meta tags or HTTP headers)

- Check whether the page is blocked in robots.txt

- Verify that the page returns a valid HTTP 200 OK status

- Confirm that the page is actually included in your sitemap, and that the sitemap is submitted in Search Console

Sometimes the issue is right here — especially if your site uses plugins or auto-generated pages.
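Most of these basic checks can be scripted. The sketch below works on data you have already downloaded (the page HTML, its response headers, the robots.txt text, and the sitemap XML), so it makes no network requests and leaves the HTTP status check to whatever tool you use for fetching. The function names are hypothetical. Note also that Python's `urllib.robotparser` applies rules in file order, which can differ from Google's longest-match behavior, so treat its answer as a first approximation.

```python
import xml.etree.ElementTree as ET
from html.parser import HTMLParser
from typing import Dict, List
from urllib.robotparser import RobotFileParser


class _RobotsMetaParser(HTMLParser):
    """Collects the content of <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            self.directives.append(attr.get("content", "").lower())


def has_noindex(html: str, headers: Dict[str, str]) -> bool:
    """True if a robots meta tag or an X-Robots-Tag header contains noindex."""
    parser = _RobotsMetaParser()
    parser.feed(html)
    found = list(parser.directives)
    for name, value in headers.items():
        if name.lower() == "x-robots-tag":
            found.append(value.lower())
    return any("noindex" in directive for directive in found)


def blocked_by_robots(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """True if the given robots.txt text disallows the URL for the user agent."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return not rules.can_fetch(user_agent, url)


def in_sitemap(sitemap_xml: str, url: str) -> bool:
    """True if the URL appears in a <loc> element of the sitemap XML."""
    root = ET.fromstring(sitemap_xml)
    locs = [el.text.strip() for el in root.iter() if el.tag.endswith("loc") and el.text]
    return url in locs
```

A page that passes all three checks and returns 200 has cleared Step 1, and the cause is more likely to be found in the steps that follow.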

Step 2 - Identify which pages dropped from the index

Analyze which pages received the “Crawled, currently not indexed” status. Are these just technical URLs: filters, sorting options, pagination (as shown earlier)? Or are they actual content pages?

If it’s content pages — especially those that previously received traffic — this is already a red flag. In that case, proceed to a more detailed analysis.

Step 3 - Check content uniqueness

Even if you’re certain the content was originally written from scratch, that doesn’t guarantee it’s still unique. It may have been copied and reposted on other websites, social media, forums, or marketplaces. As a result, your original article might now be interpreted by Google as a clone of someone else’s content.

Check these pages using Copyscape or any other available content duplication tool. If matches are found, that could explain the loss of indexing.
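If you already have the text of a suspected copy, a rough overlap score can be computed locally. The sketch below uses word shingles and Jaccard similarity, a generic near-duplicate technique. It is not how Copyscape or Google measure duplication, and the shingle size of five words is an arbitrary assumption.

```python
import re
from typing import Set, Tuple


def shingles(text: str, size: int = 5) -> Set[Tuple[str, ...]]:
    """Split text into overlapping word sequences ("shingles") of the given size."""
    words = re.findall(r"\w+", text.lower())
    if not words:
        return set()
    if len(words) < size:
        return {tuple(words)}
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard_similarity(text_a: str, text_b: str, size: int = 5) -> float:
    """Share of shingles the two texts have in common (0.0 to 1.0)."""
    a, b = shingles(text_a, size), shingles(text_b, size)
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)
```

A score close to 1.0 suggests the two texts are near-copies. Low scores say little on their own, since paraphrased content can still be unoriginal.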

Step 4 - Compare and identify patterns

Compare the pages that remained indexed with the ones that dropped out. Based on my observations, pages that remain indexed typically have:

- more overall content

- more original content

- content that is more relevant to the site’s main theme

- better structure

- stronger internal linking

- more external and social signals

You should document your own findings based on this comparison. That’s how you’ll understand exactly why Google deindexed your pages.
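One way to document the comparison is to put a few measurable attributes side by side for both groups. The sketch below assumes you have already collected word counts and inbound internal link counts per page (for example, from a site crawler). The `PageStats` fields are hypothetical, and they cover only the factors from the list that are easy to quantify.

```python
from dataclasses import dataclass
from statistics import median
from typing import Dict, List


@dataclass
class PageStats:
    url: str
    indexed: bool
    word_count: int
    inbound_internal_links: int


def compare_groups(pages: List[PageStats]) -> Dict[str, Dict[str, float]]:
    """Summarize indexed and dropped pages with medians for each metric."""
    summary: Dict[str, Dict[str, float]] = {}
    for label, flag in (("indexed", True), ("dropped", False)):
        group = [p for p in pages if p.indexed == flag]
        if not group:
            continue
        summary[label] = {
            "pages": len(group),
            "median_words": median(p.word_count for p in group),
            "median_inlinks": median(p.inbound_internal_links for p in group),
        }
    return summary
```

Medians are used instead of averages so that a few very long or heavily linked pages do not hide the typical difference between the two groups.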

Step 5 - Identify content issues

In my experience, the most common reason is a lack of value and usefulness in the content. In such cases, a minor rewrite won’t help — you’ll need to fully rework the content with a focus on substance, depth, and originality.

Here’s what to pay attention to:

Originality

The content should not just be technically unique but should include your own phrasing, observations, conclusions, and experience. AI-generated content (which essentially paraphrases other articles) is often treated by Google as low-quality. Even if it doesn’t directly duplicate existing material, Google may exclude it from the index as secondary and non-valuable.

Informational completeness

Insufficient content volume — especially when paired with poor structure and lack of logical blocks — can be seen as thin content. Articles that fail to provide detailed answers to likely user queries are not considered helpful.

Freshness

Content that contains outdated information or has lost its practical value is often removed from the index. This especially applies to pages with time-sensitive topics, lists, comparisons, and reviews. In such cases, I recommend regularly reviewing the content for freshness and updating it when necessary.
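A regular freshness review is easier with a list of pages that have not been touched for a long time. The sketch below assumes you can export a last-updated date per URL from your CMS. The one-year threshold is an arbitrary starting point, not a Google rule, and it should be shorter for time-sensitive topics.

```python
from datetime import date
from typing import Dict, List


def stale_pages(last_updated: Dict[str, date], today: date, max_age_days: int = 365) -> List[str]:
    """Return URLs whose last update is older than `max_age_days`, sorted by URL."""
    return sorted(
        url
        for url, updated in last_updated.items()
        if (today - updated).days > max_age_days
    )
```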

Structure and formatting

Lack of logical and visual structure — no headings, subheadings, lists, tables, or segmented sections — makes content harder to read and lowers its perceived quality. Content should be easy to read and logically organized. Proper tag hierarchy and content blocks can also influence how search algorithms assess the page.
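A quick structural check can be run on the page HTML. The sketch below counts headings, lists, and tables, and flags heading levels that are skipped (for example, an h2 followed directly by an h4). It says nothing about how Google scores structure. It only surfaces pages that have little or none of it.

```python
from collections import Counter
from html.parser import HTMLParser
from typing import Dict, List, Union

HEADINGS = ("h1", "h2", "h3", "h4", "h5", "h6")


class _StructureCounter(HTMLParser):
    """Counts structural tags and records heading levels in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.counts: Counter = Counter()
        self.heading_levels: List[int] = []

    def handle_starttag(self, tag, attrs):
        if tag in HEADINGS:
            self.counts["headings"] += 1
            self.heading_levels.append(int(tag[1]))
        elif tag in ("ul", "ol"):
            self.counts["lists"] += 1
        elif tag == "table":
            self.counts["tables"] += 1


def structure_report(html: str) -> Dict[str, Union[int, bool]]:
    """Return counts of headings, lists, tables, and a skipped-level flag."""
    parser = _StructureCounter()
    parser.feed(html)
    levels = parser.heading_levels
    skipped = any(later - earlier > 1 for earlier, later in zip(levels, levels[1:]))
    return {
        "headings": parser.counts["headings"],
        "lists": parser.counts["lists"],
        "tables": parser.counts["tables"],
        "skipped_heading_level": skipped,
    }
```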

Additional elements

Visual content (images, diagrams, tables, embedded videos, and interactive elements) enhances the perceived value of a page. Its absence isn’t critical, but its presence (especially when relevant) signals to Google that the page might be of higher quality than others. However, if you’re using stock photos (free or paid, it doesn’t matter), Google may also interpret that as non-original content with limited value to users.

Most importantly — your edits and improvements should not be focused on quantity, but on increasing the actual and perceived usefulness of each page. That’s the only approach that can yield results.

Step 6 - Review structure and navigation

If the page isn’t integrated into the site’s structure, that may be one of the reasons Google refuses to index it. For example, the page might not be directly relevant — or only marginally relevant — to the site’s overall theme, which is quite common.

It’s also essential to ensure that the page receives internal links from other thematically related pages — preferably ones that are already indexed and receiving traffic.

The depth of the page within your hierarchy also matters. If it takes more than three clicks from the homepage to reach it, that lowers its priority. Pages that technically exist but are not included in site navigation or in the sitemap are seen by Google as potentially low-priority and may be ignored — especially if they don’t receive external traffic or participate in internal linking.
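Click depth can be measured with a breadth-first search over the internal link graph. The sketch below assumes you have a mapping from each page to the pages it links to (for example, from a crawler export). The function names are hypothetical, and the three-click limit mirrors the rule of thumb above.

```python
from collections import deque
from typing import Dict, Iterable, List


def click_depths(links: Dict[str, List[str]], home: str) -> Dict[str, int]:
    """Minimum number of clicks from the homepage to every reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def deep_or_orphaned(
    links: Dict[str, List[str]],
    home: str,
    all_pages: Iterable[str],
    max_depth: int = 3,
) -> List[str]:
    """Pages deeper than `max_depth` clicks, or not reachable from home at all."""
    depths = click_depths(links, home)
    return sorted(p for p in all_pages if depths.get(p, max_depth + 1) > max_depth)
```

Pages returned by `deep_or_orphaned` are candidates for extra internal links from related, already indexed pages.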

Step 7 - Check technical accessibility of content

If your page has no content issues, structural problems, or linking gaps, but is still being dropped from the index — you need to check what exactly Googlebot sees when trying to crawl it. Sometimes, the issue is that the page technically exists, but the actual content isn’t accessible to the bot.

Here’s what to check:

Is the content loaded via JavaScript?

If the main content of the page only appears after JS is executed, there’s a strong chance Googlebot didn’t see it. This is especially true for SPA frameworks like React, Vue, or Angular without server-side rendering. Google can render JavaScript — but not always, and not completely. If it downloaded the HTML and it was empty — you’ll get that infamous “Crawled, currently not indexed” status.
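A quick way to spot this is to count the visible words in the raw HTML, before any JavaScript runs. The sketch below ignores script, style, and template contents. The 150-word threshold is an arbitrary assumption, and a low count only hints at client-side rendering, so confirm it in Search Console.

```python
import re
from html.parser import HTMLParser
from typing import List


class _TextExtractor(HTMLParser):
    """Collects text nodes, skipping the contents of non-visible elements."""

    SKIP = {"script", "style", "template"}

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.chunks: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def visible_word_count(raw_html: str) -> int:
    """Number of words in the text nodes of the unrendered HTML."""
    extractor = _TextExtractor()
    extractor.feed(raw_html)
    extractor.close()
    return len(re.findall(r"\w+", " ".join(extractor.chunks)))


def looks_js_dependent(raw_html: str, min_words: int = 150) -> bool:
    """True if the raw HTML carries fewer visible words than `min_words`."""
    return visible_word_count(raw_html) < min_words
```

A typical single-page-app shell, with an empty root element and a script bundle, scores close to zero here even though the rendered page looks complete in a browser.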

Is lazy loading used?

If text, images, or tables load only when scrolling, make sure they are present in the initial DOM. Otherwise, Google might think there’s almost no content on the page.

Are there rendering errors?

Use the URL Inspection tool in Google Search Console: run “Test live URL”, then open “View tested page” to see the rendered HTML and a screenshot of what Googlebot saw. If the screenshot is blank, you have your answer.

Does the mobile version cut off content?

Google indexes sites using mobile-first indexing. If the mobile version hides text, removes blocks, or loads content in chunks — that will also impact indexing.

Do redirects or unusual server responses interfere?

Sometimes developers create “smart” logic that serves different versions of a URL based on user-agent, language, cookies, etc. As a result, Google might receive something you didn’t intend, such as a 403 or 500 error or an unexpected 302 redirect.
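To check for this kind of logic, request the same URL with different user-agent strings and compare the results. The sketch below contains only the comparison. The `fetch` callable is a placeholder you supply with your own HTTP client (one that does not follow redirects, so a 302 stays visible), and the browser user-agent string is made up for illustration. Servers that verify Googlebot by IP address may respond differently to real Googlebot than to a test request, so a clean result here is not conclusive.

```python
import hashlib
from typing import Callable, Dict, Tuple

# fetch(url, user_agent) -> (status_code, body); supplied by the caller.
Fetch = Callable[[str, str], Tuple[int, str]]

USER_AGENTS = {
    # Illustrative browser string, not a real browser release.
    "browser": "Mozilla/5.0 (X11; Linux x86_64) ExampleBrowser/1.0",
    "googlebot": "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
}


def compare_responses(fetch: Fetch, url: str, agents: Dict[str, str] = USER_AGENTS) -> dict:
    """Fetch the URL once per user agent and report status or body differences."""
    seen = {}
    for label, user_agent in agents.items():
        status, body = fetch(url, user_agent)
        digest = hashlib.sha256(body.encode("utf-8")).hexdigest()
        seen[label] = (status, digest)
    statuses = {status for status, _ in seen.values()}
    digests = {digest for _, digest in seen.values()}
    return {
        "status_differs": len(statuses) > 1,
        "body_differs": len(digests) > 1,
        "statuses": {label: status for label, (status, _) in seen.items()},
    }
```

Pages with dynamic elements (timestamps, tokens) will show body differences on every request, so a differing status code is the more reliable signal.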

Recheck robots.txt and meta-robots directives

Especially if you recently changed anything in your templates. Sometimes pages fall out of the index simply because a bit of code accidentally added noindex or blocked a folder in robots.txt.

If all of this checks out — you can go ahead and request reindexing. If not — Google is seeing a technically empty or problematic page, and no amount of original content will help. In that case, fix the underlying issue first — and only then submit a reindexing request in GSC.

Ivan Bogovik is the founder of RecoveryForge, an SEO agency focused on penalty recovery. With over 16 years in the SEO field, Ivan has extensive experience helping websites recover from Google penalties like Penguin, Panda, and other algorithmic updates. Since 2014, he has successfully guided many websites back to health and regularly shares his insights at SEO events and webinars.
