Case Study: Restoring Traffic to a Review Website After the 2022 Product Reviews Update

This was one of the most challenging website recovery cases we’ve handled at RecoveryForge, and also a success story we’re proud of.

The difficulty was that the client’s team had already made significant changes to the site while trying to recover from a Google penalty on their own. They reached out to us months later, after several unsuccessful attempts to fix the site themselves.

This made our job considerably harder. Our team had to audit the current version of the site and also request a detailed list of every change the client’s team had made after the penalty.

Client's Website

The client’s website is a classic review website, focused on software reviews with around 500 content pages, all in English. It primarily targets a US audience, though it had some organic traffic from the UK and, to a lesser extent, from India.

The main monetization methods are affiliate partnerships and banner ads.

The website consists of four main types of pages:

- Software review pages (most of the site)

- Ranking pages

- Category pages

- Coupon code pages

According to the client, all content was written exclusively by copywriters, with no AI-generated material involved.

The website was built on WordPress without using drag-and-drop editors, which gave it an advantage in terms of loading speed. All images were automatically optimized by a plugin, helping to keep their size low and improve site performance.

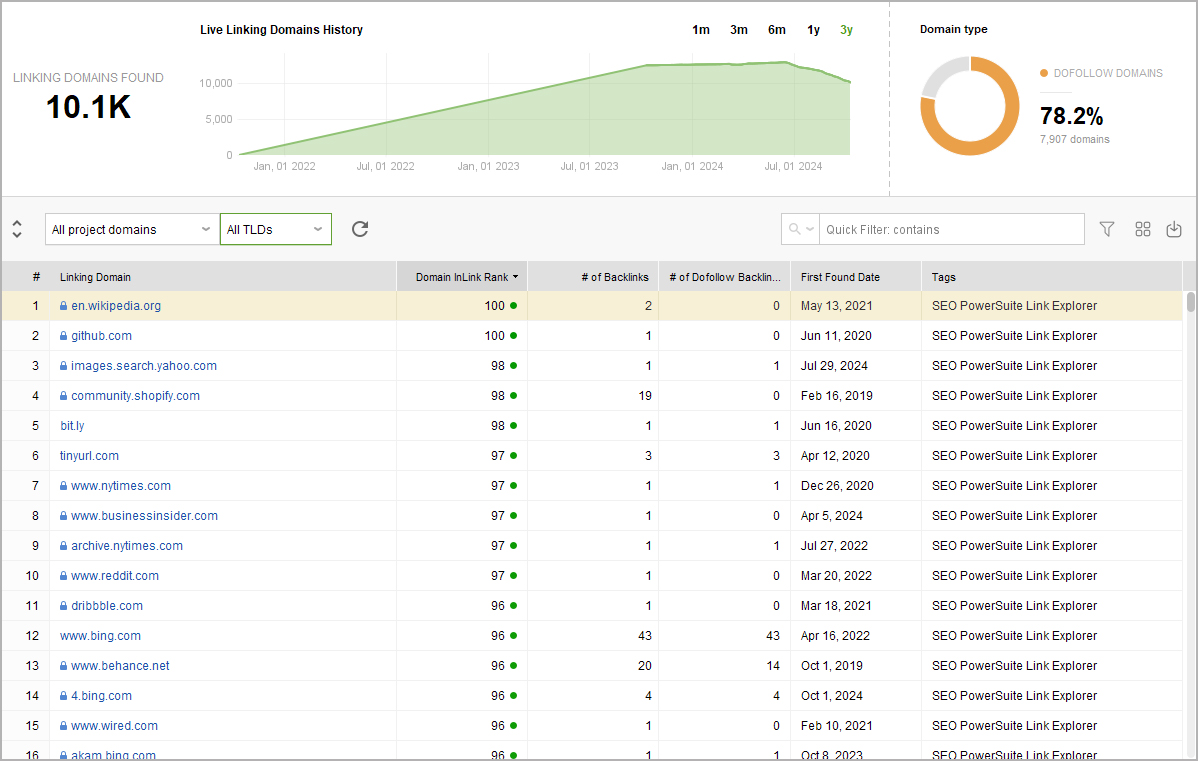

The client also provided details about their link-building strategy, which primarily involved obtaining backlinks through guest and sponsored articles, link insertions in relevant posts, forum and community links, and personal agreements with journalists from reputable media outlets.

In total, at the time of the penalty, the site had backlinks from over 6,000 referring domains, many of them trusted and authoritative websites.

It was clear that the backlink profile wasn’t the weak point of this website. However, we expected to find some spam links upon deeper analysis.

Drop in Organic Traffic and the Client’s Attempts to Recover It

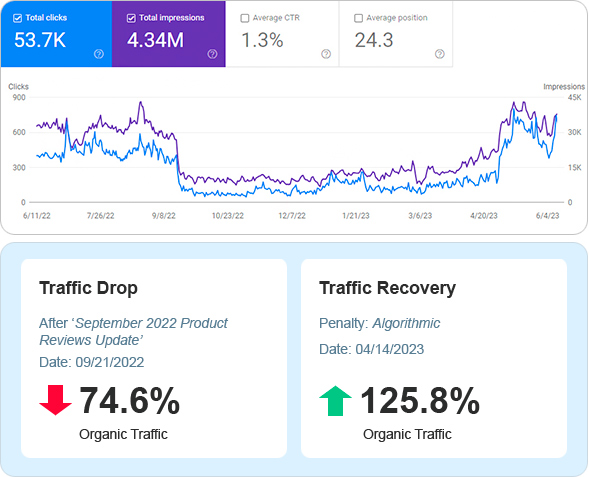

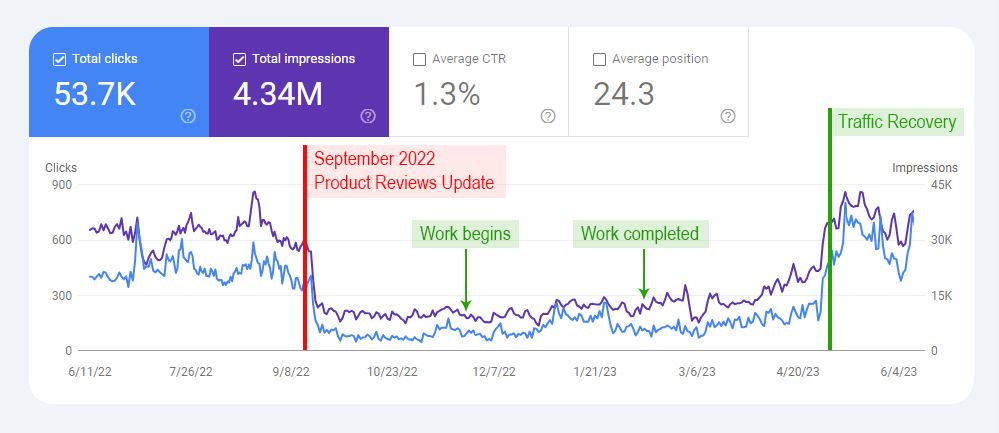

Between September 20 and 25, 2022, the website’s search traffic dropped sharply. In just five days, organic traffic fell by 74.6%: before the drop it averaged 480 clicks per day, and afterward it was less than 100.

The client and their team correctly identified that the cause of the traffic decline was an algorithmic Google penalty following the September 2022 Product Reviews Update.

The client decided to rewrite the content on the most visited pages. As a result, the rewritten pages grew by 20 to 30% on average.

Next, the client’s SEOs identified pages that hadn’t received any search traffic over the past 6 months. These pages were removed, with 301 redirects set up to the most relevant pages. In total, 34 pages with zero traffic were removed this way.
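The client’s selection step amounts to a filter over per-page click totals. Below is a minimal Python sketch, not the client’s actual tooling. It assumes a hypothetical export with six monthly organic click counts per URL and a redirect target chosen by hand for each page. `PageStats` and `build_redirect_map` are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    url: str
    monthly_clicks: list[int]  # organic clicks for each of the last 6 months (hypothetical export)
    redirect_target: str       # most relevant surviving page, chosen by hand


def build_redirect_map(pages: list[PageStats]) -> dict[str, str]:
    """Return {removed_url: target_url} for pages with zero clicks across the whole window."""
    return {p.url: p.redirect_target for p in pages if sum(p.monthly_clicks) == 0}


pages = [
    PageStats("/old-review-a/", [0, 0, 0, 0, 0, 0], "/review-a/"),
    PageStats("/review-b/", [12, 9, 15, 7, 10, 11], "/reviews/"),
]
for source, target in build_redirect_map(pages).items():
    print(f"301 {source} -> {target}")  # prints: 301 /old-review-a/ -> /review-a/
```

The resulting map can then be turned into redirect rules in whatever the site already uses, such as a redirect plugin or server configuration.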

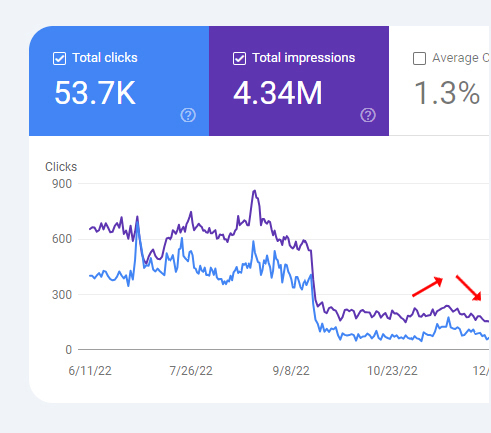

Following these changes, in early November 2022, the website showed its first signs of a steady increase in search traffic. The growth lasted for nearly a week, reaching 170 visitors per day, but then it stalled and gradually declined again.

At this point, on the recommendation of their contacts, the website owner reached out to us for help in identifying the true reasons behind the Google penalty.

We Get to Work

The client reached out to us for help at the end of November 2022, when the website’s search traffic had dropped below 100 visitors per day and continued to decline daily.

At our request, the client provided us with the necessary information about their content creation methods, content authors, and link-building strategy. They also gave us access to Google Search Console and Google Analytics.

Additionally, the client sent us the content from the old pages that had been on the site before the penalty and were either removed or rewritten.

After analyzing this content, we didn’t find a significant difference from the current content on the website. The main change was length: the new pages were longer and more detailed, but that was about the only improvement in the updated content.

Template-Based Content

Even before conducting a deep content analysis, we noticed that all the review pages followed the same template structure. Each review page started with an introduction, followed by a table with product features, then a section on pros, and so on.

Even the list of features in the tables was identical from review to review. Each review also ended with a section titled something like “Why should you choose <Name>?”

Most of the H2 subheadings were nearly identical across pages, often differing only in the name of the brand being reviewed.

Content Quality

The content was easy to read and written simply and clearly. It wasn’t bad, but it lacked the detailed facts that could have set it apart from similar competitor websites.

The introductions were short and included the necessary keywords. This was a positive, since many review websites suffer from overly long introductions that read like literary works and are usually skipped by readers.

However, our uniqueness check revealed that the first paragraph of each page was reused elsewhere. It served as the description for Facebook posts and, on pages with their own video reviews, as the description for YouTube videos.

As a result, when we searched Google for any fragment of a first paragraph in quotation marks, the top result was almost always the Facebook or YouTube page, not the client’s website. This was another signal to us that Google likely rated this content poorly.
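The check above relied on Google’s exact-phrase search. A rough local stand-in is to compare word n-gram “shingles” with Jaccard similarity. This is a swapped-in technique, not the method used in the audit. It shows how much of a page intro is reused in a social media description. The 5-word shingle size and the sample texts are assumptions.

```python
import re


def shingles(text: str, n: int = 5) -> set[tuple[str, ...]]:
    """Lowercase the text and split it into overlapping n-word sequences."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def jaccard(a: str, b: str, n: int = 5) -> float:
    """Shared shingles divided by all distinct shingles (1.0 means identical sets)."""
    sa, sb = shingles(a, n), shingles(b, n)
    if not sa or not sb:
        return 0.0  # at least one text is shorter than n words
    return len(sa & sb) / len(sa | sb)


intro = "Acme VPN is a fast and affordable VPN service for home users."
description = "Acme VPN is a fast and affordable VPN service for home users. Watch our full review."
print(f"{jaccard(intro, description):.2f}")  # prints 0.67
```

A score near 1.0 means the two texts are close to identical. Where to draw the line for “duplicated” is a judgment call.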

Additionally, many pages hadn’t been updated in a long time: many showed a last-updated date of 2020, and some were even older. The content clearly needed to be refreshed and improved.

Issues with Page Indexing

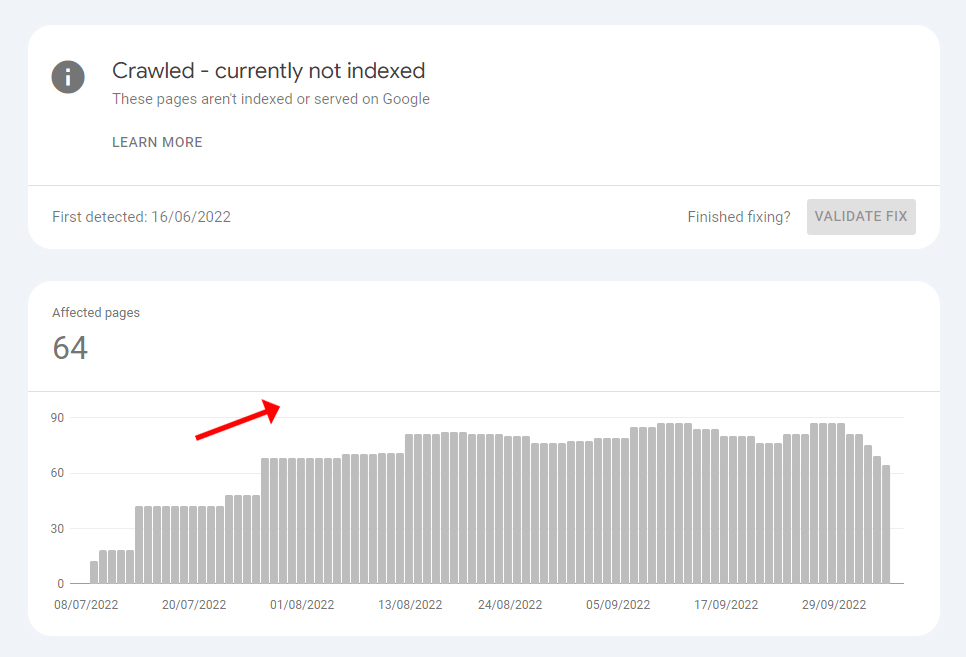

Another indication of weak content on the website was that some pages began disappearing from Google’s index.

In Google Search Console’s “Page indexing” report, we found a growing number of pages that Google had crawled but not indexed (the “Crawled - currently not indexed” status).

This usually happens in two cases:

- Content quality is too low, and Google decides it’s not worth indexing.

- Content cannibalization. In this case, there are other pages on the site that are more relevant to the main search query than the current page.
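One way to look for the second case is to find queries for which several pages on the site share impressions. The sketch below assumes rows exported from the Search Console performance report with both the query and page dimensions. The 20% share threshold, the function name and the sample rows are hypothetical choices, not part of the audit.

```python
from collections import defaultdict


def find_cannibalized_queries(rows, min_share=0.2):
    """Return {query: [pages]} for queries where two or more pages each
    receive at least `min_share` of that query's impressions.

    rows: iterable of (query, page, impressions) tuples.
    """
    by_query = defaultdict(lambda: defaultdict(int))
    for query, page, impressions in rows:
        by_query[query][page] += impressions

    flagged = {}
    for query, pages in by_query.items():
        total = sum(pages.values())
        if total == 0:
            continue
        competing = sorted(p for p, imp in pages.items() if imp / total >= min_share)
        if len(competing) >= 2:
            flagged[query] = competing
    return flagged


rows = [
    ("best vpn", "/rankings/best-vpn/", 900),
    ("best vpn", "/reviews/acme-vpn/", 600),
    ("acme vpn review", "/reviews/acme-vpn/", 500),
    ("acme vpn review", "/coupons/acme-vpn/", 20),
]
print(find_cannibalized_queries(rows))
# {'best vpn': ['/rankings/best-vpn/', '/reviews/acme-vpn/']}
```

A flagged query is only a candidate. Whether the pages truly compete, or simply serve different intents, still needs a manual look.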

Low Text Quantity

In addition to review and ranking pages, the website had coupon code pages. To attract search traffic, many products mentioned on the website had their own coupon code page, even when no discount code actually existed for them.

Ethics aside, these pages had very little text, so Google could perceive them as thin content. Given how many such pages there were, this could easily have caused problems and even led to a Google penalty for the entire website.

This was the second likely reason for the penalty, after low-quality content.
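A quick way to size a thin-content problem is to count the visible words on each page. This standard-library sketch is an illustration only, and the 100-word cut-off is an assumed threshold. Because it counts all visible text, navigation and footer words inflate the total unless you pass in only the main content area.

```python
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects visible text, skipping <script> and <style> contents."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def word_count(html: str) -> int:
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return len(" ".join(parser.chunks).split())


def flag_thin_pages(pages: dict[str, str], min_words: int = 100) -> list[str]:
    """Return URLs whose visible text has fewer than `min_words` words."""
    return sorted(url for url, html in pages.items() if word_count(html) < min_words)


pages = {
    "/coupons/tool-a/": "<h1>Tool A coupon</h1><p>No code available right now.</p>",
    "/reviews/tool-a/": "<h1>Tool A review</h1><p>" + "detail " * 300 + "</p>",
}
print(flag_thin_pages(pages))  # ['/coupons/tool-a/']
```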

Content Improvement Recommendations

Remove more pages without search traffic

The client’s team had already removed some of these pages, but not enough of them. We recommended removing more, particularly pages with little potential to attract search traffic. Each page slated for deletion was checked for external backlinks. If it had backlinks of acceptable quality, it was 301-redirected to the most relevant page.

Personalized approach for each page

We suggested moving away from the template-based structure toward more personalized content, especially on the review pages. The focus should be on the product itself and its unique features, which the review should highlight. There was no need to follow a fixed structure, and including a table in the first half of the article became optional. For some reviews, where appropriate, we recommended using two or more tables. The goal was individualized content that fully showcases the product’s pros and cons without being tied to a site-wide template.

Increase article length

When rewriting and expanding articles, we suggested increasing their length where possible, as long as the quality remained high. This mainly meant adding missing sections and an FAQ section at the end of each article.

Add more entities to the content

We recommended compiling a list of entities and LSI phrases for each article instead of focusing solely on keywords. The more relevant entities an article covered, the better we expected it to rank.

Use original screenshots and images

One of our main recommendations for improving the content was to use original images. We advised against using third-party screenshots and stock images, suggesting instead that the client create their own screenshots and collages for the articles.

The client’s team followed this approach to update the content, and for some pages where the content was completely outdated, they rewrote it entirely according to our recommendations.

Issue with Coupon Code Pages

The biggest challenge in working with the client was the coupon code pages, which the client was adamant about not removing, even though most of these pages had minimal or no organic traffic.

Previously, the client’s team had heavily relied on these pages for SEO and believed that removing many of them would lead to an even greater loss of organic traffic.

From our perspective, these pages (most of which contained fewer than 100 words) were a burden for the website.

Therefore, we suggested that the client analyze the coupon code pages and remove the ones that were low-performing and rarely visited.

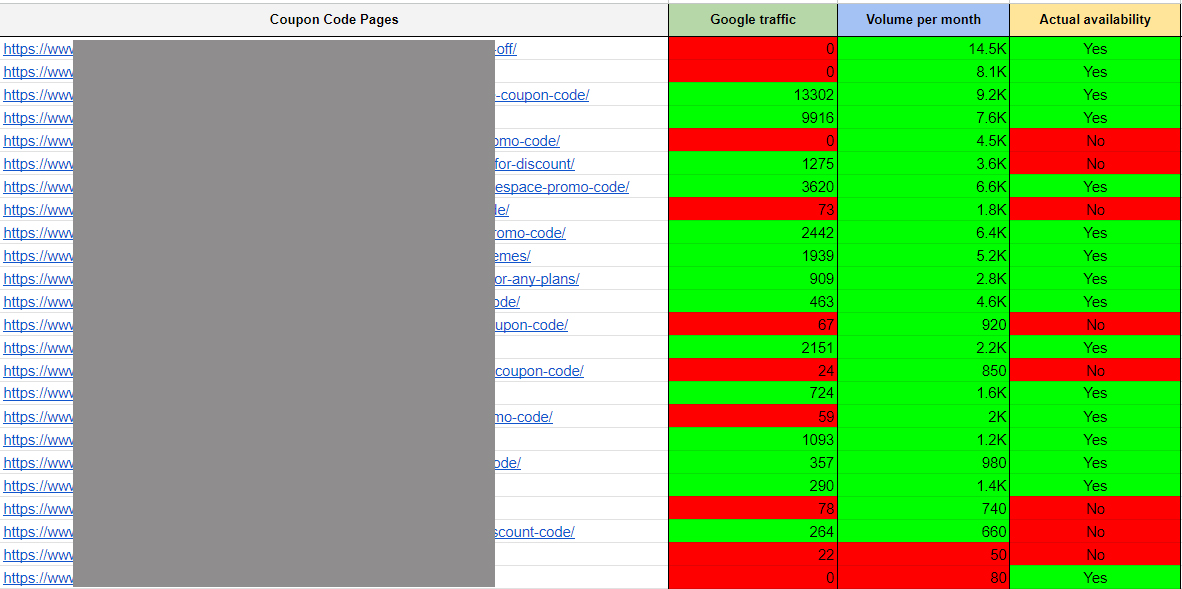

To do this, we proposed compiling them all into a table. In the left column, we would list the URLs of the pages, and in the next three columns, we would include: organic traffic over the past 16 months, the main keyword’s search volume per month, and whether a valid coupon code actually existed.

Based on this analysis, coupon code pages that received two or more red flags in the table were removed and 301-redirected to other, more relevant coupon code pages.

The coupon code pages that had only one or no red flags were kept on the site, but their content was expanded by adding more information about the service/software, its benefits, etc.
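The red-flag triage can be expressed as a small scoring function. The case study does not say where the cut-offs for traffic and search volume were, so the two thresholds below are hypothetical placeholders. The class name, function names and sample pages are also illustrative.

```python
from dataclasses import dataclass

# Hypothetical cut-offs: the case study does not state the actual thresholds.
MIN_CLICKS_16_MONTHS = 50
MIN_MONTHLY_SEARCH_VOLUME = 100


@dataclass
class CouponPage:
    url: str
    clicks_16_months: int   # organic traffic over the past 16 months
    keyword_volume: int     # monthly search volume of the main keyword
    has_valid_coupon: bool  # does a working coupon code actually exist?


def red_flags(page: CouponPage) -> int:
    """Count how many of the three table columns raise a red flag."""
    return sum([
        page.clicks_16_months < MIN_CLICKS_16_MONTHS,
        page.keyword_volume < MIN_MONTHLY_SEARCH_VOLUME,
        not page.has_valid_coupon,
    ])


def decide(page: CouponPage) -> str:
    """Two or more red flags: remove and 301-redirect; otherwise keep and expand."""
    return "remove + 301" if red_flags(page) >= 2 else "keep + expand"


pages = [
    CouponPage("/coupons/tool-a/", 12, 40, False),
    CouponPage("/coupons/tool-b/", 800, 1300, True),
    CouponPage("/coupons/tool-c/", 30, 500, True),
]
for page in pages:
    print(f"{page.url}: {red_flags(page)} red flag(s) -> {decide(page)}")
```

Keeping the thresholds as named constants makes them easy to tune for each site before the table is reviewed by hand.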

In a way, the remaining coupon code pages became mini-reviews. To avoid future content cannibalization issues, we also added an internal link from each coupon code page to the corresponding review page.

Additional Recommendations

Chaotic Internal Linking Structure

All of the website’s pages linked to one another without following a proper SILO structure. We demonstrated how to structure internal linking to concentrate PageRank on the site’s most important pages, and explained how to identify those key pages.

Improving Author Bios

The author bios on the website contained very few entities and resembled short AI-generated descriptions. We provided clear examples and guidance on how author bios should be structured so that Google’s quality raters would have no doubts when reviewing them.

Fixing Affiliate Links

The client’s website contained many affiliate links, which appeared on almost every page as buttons and text hyperlinks. They were masked by a plugin (PrettyLinks) that created short redirect links. We recommended not using a plugin for this and instead coding affiliate links manually with rel="nofollow noopener sponsored".

Removing Spam Backlinks

Checking every backlink in the client’s profile was outside the scope of our audit. However, we flagged links that were (or appeared to be) spammy and explained the criteria for identifying them.

Following our recommendations, the client’s team reviewed their backlink profile and selected the links that could harm the website. They then contacted the administrators of those websites to request that the links be removed.

As a result, some spammy links were removed, and the rest were submitted through the Disavow links tool in Google Search Console.
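A disavow file is a plain text file with one entry per line. Each entry is either a full URL, or `domain:` followed by a host to disavow a whole site, and lines starting with `#` are comments. The helper below is a hypothetical sketch for assembling that file from a manually reviewed list. Deciding which domains deserve a `domain:` line remains a manual call.

```python
from urllib.parse import urlsplit


def build_disavow_file(spam_urls, whole_domains):
    """Return the text of a disavow file.

    whole_domains: hosts to disavow entirely (written as `domain:` lines).
    spam_urls: individual URLs; URLs whose host exactly matches an entry in
    whole_domains are skipped, since the domain line already lists that host.
    """
    domain_set = {d.lower() for d in whole_domains}
    lines = ["# Links that could not be removed after outreach"]
    lines += [f"domain:{d}" for d in sorted(domain_set)]
    for url in sorted(set(spam_urls)):
        host = urlsplit(url).hostname or ""  # hostname is returned lowercased
        if host not in domain_set:
            lines.append(url)
    return "\n".join(lines) + "\n"


text = build_disavow_file(
    ["https://spam.example/page1", "https://other.example/post?id=2", "https://spam.example/page2"],
    {"spam.example"},
)
print(text, end="")
```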

Results

One factor that helped the website recover was how quickly the client’s team implemented the necessary fixes. Rewriting and improving the content took the longest.

As the errors were fixed, organic traffic gradually increased, which motivated the team to keep moving forward. After the April 2023 Reviews Update, traffic fully recovered and even exceeded pre-penalty levels.

This is a great example of how a thorough audit, combined with swift correction of the penalty’s causes, can help a site recover from even a long-standing Google penalty. It also shows the value of staying confident and patiently continuing to improve the website.

If your website is facing a similar situation and you’ve been trying to recover from a Google penalty without success, reach out to us. We may be able to help.

Ivan Bogovik is the founder of RecoveryForge, an SEO agency focused on penalty recovery. With over 16 years in the SEO field, Ivan has extensive experience helping websites recover from Google penalties like Penguin, Panda, and other algorithmic updates. Since 2014, he has successfully guided many websites back to health and regularly shares his insights at SEO events and webinars.